Why Every API Needs a Clock?

Why every API needs a clock

When Van Jacobson couldn’t upload documents in 1985, he found that the network throughput had stalled to 1 bit per second.

“The problem was that we had no clock at startup. We had to build a clock”

The clock would help slow down the startup process. That’s how slow start came about along with other congestion control algorithms.

TCP bit throttling needed a clock. Are APIs any different? Can your APIs get congested too?

It’s like the 1980s again

When nodes retried sending packets, they ended up sending more bits on the network. Retries were choking the pipe. The solution was to protect the shared resource. In the API economy, the APIs are the shared resource for all your customers. Can one or a few actors choke your resource?

All bits and participants in congestion control are created equal, but not all APIs and their callers are the same. There are many more attributes to work with than the bits TCP had. At a high level, though, three things matter: the business need, the infrastructure that runs the API, and the caller of the API.

Unlike TCP, rate-limiting choices for APIs are dictated by multiple factors. The basic premise remains the same, however: use a clock to count and limit access to a shared resource.

Slow start and congestion control were built into the TCP/IP stack and run by every computer that wanted to get on the internet. But can you say the same for every user who calls your API?

Today your APIs are called by a variety of actors: end users, business processes, scripts, automation frameworks, eventing mechanisms, notifications from external sources, and more. How do you ensure that all of them are good citizens and won’t bring your API down?

API rate-limits - adding clock to your API

The clock in slow start was used to count the bits sent over the network.

But what attributes should be counted for an API? At L7, what is your metaphorical pipe? Is it a specific path/route? Is it a specific upstream?

And what do you count? Do you count the number of requests? Or number of requests coming from a specific location? Or number of requests destined for a specific pipe? And are all requests created equal? When you are congested, do you want to treat GET and POST the same way?

Token bucket - select your token and select your bucket size

A simple token bucket algorithm can be used to rate-limit APIs. The answer to “what do you count” maps to a token. A token could be built from an incoming HTTP header that identifies a user, or from the caller’s location, found by a geo-lookup on the source IP. Alternatively, a token could be built from the destination of the request. L7 attributes can be mixed and matched to build a token.

The bucket is the number of tokens we are willing to count per second, per minute, per hour, and so on. Combining the two gives a rate, tokens per bucket, that can be enforced for a specific route or upstream.
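The counting described above can be sketched in a few lines of Python. This is a minimal illustration, not Enroute’s implementation: it uses a fixed-window counter (one count per token per clock-aligned window) rather than a classic refilling token bucket, and the names `WindowCounter`, `UNIT_SECONDS`, and `allow` are assumptions made up for this example.

```python
import time
from collections import defaultdict

# Seconds per bucket unit; the unit names are illustrative.
UNIT_SECONDS = {"second": 1, "minute": 60, "hour": 3600}


class WindowCounter:
    """Counts requests per token within a clock-aligned window (hypothetical helper)."""

    def __init__(self, limit, unit, clock=time.time):
        self.limit = limit
        self.window = UNIT_SECONDS[unit]
        self.clock = clock  # injectable so the example can control time
        # Old windows are never evicted here; a real store would expire them.
        self.counts = defaultdict(int)

    def allow(self, token):
        # The clock decides which window the request falls into.
        window_id = int(self.clock() // self.window)
        key = (token, window_id)
        if self.counts[key] >= self.limit:
            return False  # bucket exhausted for this window
        self.counts[key] += 1
        return True


# Usage with a fake clock: two requests per minute for each token.
now = [0.0]
limiter = WindowCounter(limit=2, unit="minute", clock=lambda: now[0])
results = [limiter.allow("user-42") for _ in range(3)]  # third call is rejected
now[0] = 60.0  # the clock moves into the next minute
after_tick = limiter.allow("user-42")  # allowed again in the new window
```

The token here is an opaque string, so the same counter works whether the token comes from a header, a location lookup, or a destination.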

Rate-limit in Enroute Universal Gateway

Enroute provides the granularity to perform rate-limiting at the route level. You decide, per route, which tokens to send to the rate-limit engine. For instance, you could send the IP address of the incoming connection or a specific header, such as an authentication header.

Once the rate-limit engine receives these tokens, it can either use them directly or concatenate them to build a new token. Counting is then performed in Redis on the basis of this new token.

The bucket granularity can be a second, a minute, or an hour.

Rules for the rate-limit engine let you specify how to construct a token and define a bucket.
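To make the idea of rules concrete, here is a rough Python sketch of how an engine might resolve a request’s tokens to a limit and build the key it counts under. It is an assumption-laden illustration, not Enroute’s code: the matching order (an exact key and value beats a key-only catch-all), the key format, the function names `find_limit` and `counter_key`, and the limits in `RULES` are all hypothetical.

```python
def find_limit(rules, entries):
    """Walk nested rules along the (key, value) entries of one request.

    Assumed semantics: at each level an exact key+value rule wins over a
    key-only rule, which acts as a catch-all for that key.
    """
    node = None
    for key, value in entries:
        exact = fallback = None
        for rule in rules:
            if rule["key"] != key:
                continue
            if rule.get("value") == value:
                exact = rule
            elif "value" not in rule:
                fallback = rule
        node = exact or fallback
        if node is None:
            return None  # no rule applies, so the request is not limited
        rules = node.get("descriptors", [])
    return node.get("rate_limit") if node else None


def counter_key(domain, entries):
    # Concatenate the tokens into the single key used for counting
    # (the separator and layout are made up for this sketch).
    return domain + "_" + "_".join(f"{k}_{v}" for k, v in entries)


# Illustrative rules: callers without an app key get a tighter limit.
RULES = [
    {"key": "x-app-key", "value": "x-app-notfound",
     "descriptors": [{"key": "remote_address",
                      "rate_limit": {"unit": "hour", "requests_per_unit": 10}}]},
    {"key": "x-app-key",
     "descriptors": [{"key": "remote_address",
                      "rate_limit": {"unit": "hour", "requests_per_unit": 1000}}]},
]

known = [("x-app-key", "abc123"), ("remote_address", "203.0.113.7")]
anonymous = [("x-app-key", "x-app-notfound"), ("remote_address", "203.0.113.7")]
known_limit = find_limit(RULES, known)
anonymous_limit = find_limit(RULES, anonymous)
known_key = counter_key("enroute", known)
```

Because the remote address is part of the counted key, each combination of app key and client address gets its own bucket in this sketch.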

Examples

We look at how a few public APIs document their rate limits and see how those limits map to our system, using eBay and Pinterest as examples.

We only map these use-cases to tokens sent to the rate-limit engine. Details on how to achieve this using actual code can be found in the article on rate-limiting for Enroute Standalone Gateway and Enroute Kubernetes Ingress Gateway.

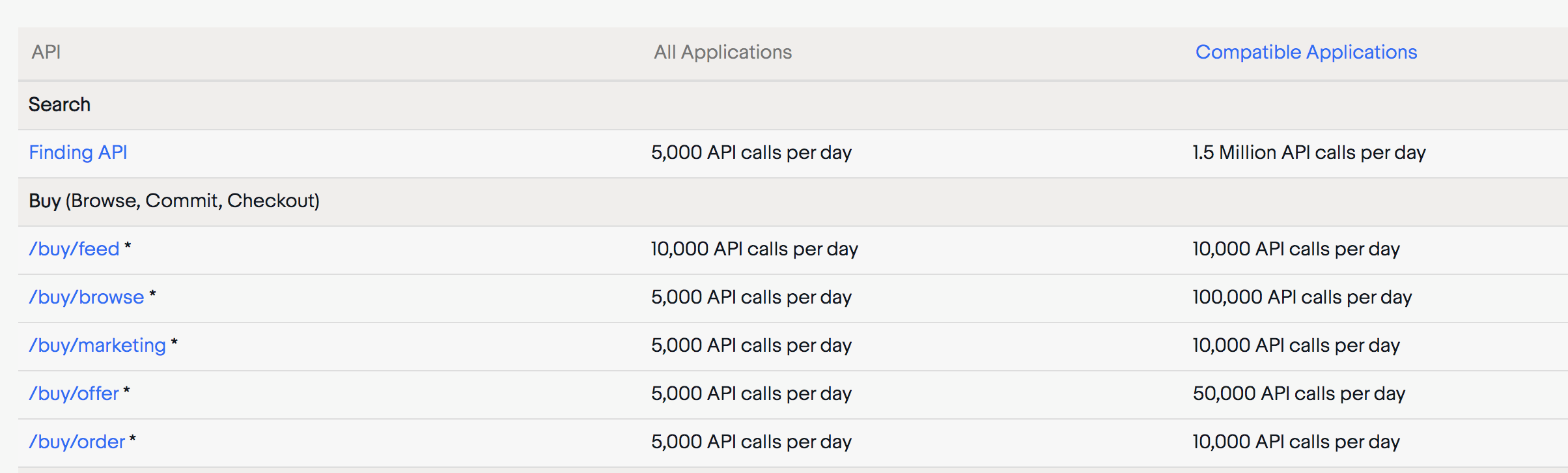

Here is a subset of the rate limits that eBay applies to some of its APIs. Compatible Applications are the ones that have signed up with eBay and have an API key.

The token sent to the rate-limit engine would be the HTTP header that carries the unique token associated with the user. If the token is present in the query string instead, it can be extracted and sent to the rate-limit engine. You can also look at a working example for eBay.
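The extraction step can be sketched in Python as follows. This only illustrates the logic; in Enroute the extraction runs inside the gateway. The function name `app_key`, the query parameter `appid`, and the default header name are assumptions for this example, while the placeholder `x-app-notfound` is the value that also appears in the engine configuration later in this article.

```python
from urllib.parse import parse_qs, urlsplit

# Placeholder token for callers that present no key at all.
MISSING = "x-app-notfound"


def app_key(headers, url, header_name="x-app-key", query_param="appid"):
    """Return the caller's token from a header, else the query string (hypothetical helper)."""
    # HTTP header names are case-insensitive, so normalize before lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    key = normalized.get(header_name.lower())
    if key:
        return key
    # Fall back to the query string, e.g. /items?appid=abc123
    values = parse_qs(urlsplit(url).query).get(query_param)
    if values:
        return values[0]
    return MISSING


from_header = app_key({"X-App-Key": "abc123"}, "/items")
from_query = app_key({}, "/items?appid=q-789&limit=5")
no_key = app_key({}, "/items")
```

Mapping keyless callers to a fixed placeholder lets the engine apply a separate, usually stricter, limit to them instead of skipping the check.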

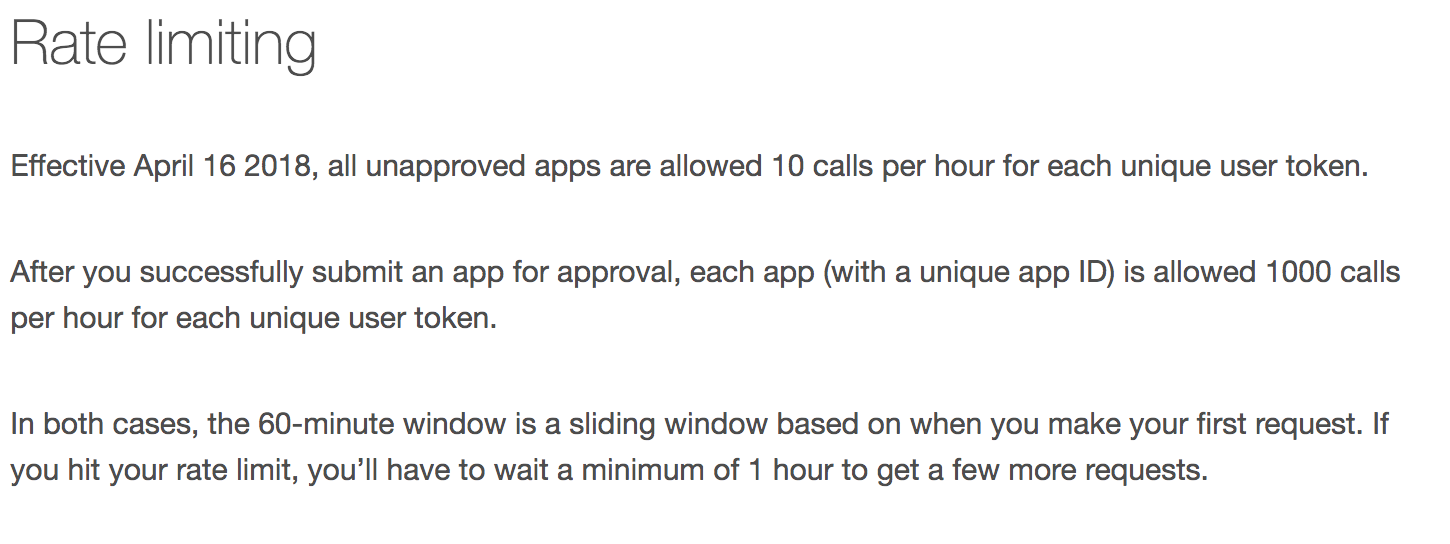

Here is how Pinterest describes the rate-limiting it applies to some of its APIs.

This is very similar to eBay above. The token would be the HTTP header or an API key passed as a query string. While eBay uses a bucket duration of a day, Pinterest uses an hour. You can also look at the working example for Pinterest.

Working code and examples can be found in the article on rate-limiting for Enroute Standalone Gateway and Enroute Kubernetes Ingress Gateway.

Rate-limiting is fundamental to APIs

We believe rate-limiting is something every API needs. A lot of popular API gateways (Gloo, Ambassador, Kong) charge for it. We consider rate-limiting a fundamental feature that should be free. We also plan to open source it eventually.

Run rate-limit anywhere along your traffic path

Enroute Universal Gateway can run rate-limit network functions anywhere along the network path. It can protect a service by running as a sidecar to that service. Alternatively, organizations adopting Kubernetes can run it at the Kubernetes ingress for microservices running inside the cluster. Enroute’s flexibility also allows running rate-limiting standalone in a private cloud or public cloud environment. A single unified mechanism to configure all of these makes Enroute Universal Gateway easy to use.

Configuration on Enroute Universal Gateway for the above-mentioned rate limits

The following steps are involved in recreating the real-world examples:

- Use a Lua script to extract the user token from the request and send it as a header value for the rate-limit engine to read

- Add a route action to send request state to the rate-limit engine when a request matches that route

- Add rate-limit engine descriptors to specify limits on the user

The first steps are covered in a more detailed write-up. The rate-limit engine configuration descriptors are shown below.

eBay

When a rate-limit filter with the following value is associated with a route, it sends the x-app-key header and the remote_address to the rate-limit engine.

    {
      "descriptors": [
        {
          "request_headers": {
            "header_name": "x-app-key",
            "descriptor_key": "x-app-key"
          }
        },
        {
          "remote_address": "{}"
        }
      ]
    }

The rate-limit engine uses x-app-key and remote_address to build tokens, do the counting, and enforce rate limits:

    {
      "domain": "enroute",
      "descriptors": [
        {
          "key": "x-app-key",
          "value": "x-app-notfound",
          "descriptors": [
            {
              "key": "remote_address",
              "rate_limit": {
                "unit": "second",
                "requests_per_unit": 5000
              }
            }
          ]
        },
        {
          "key": "x-app-key",
          "descriptors": [
            {
              "key": "remote_address",
              "rate_limit": {
                "unit": "second",
                "requests_per_unit": 100000
              }
            }
          ]
        }
      ]
    }

Pinterest

The filter configuration is the same as in the eBay example above.

When a rate-limit filter with the following value is associated with a route, it sends the x-app-key header and the remote_address to the rate-limit engine.

    {
      "descriptors": [
        {
          "request_headers": {
            "header_name": "x-app-key",
            "descriptor_key": "x-app-key"
          }
        },
        {
          "remote_address": "{}"
        }
      ]
    }

The rate-limit engine uses x-app-key and remote_address to build tokens, do the counting, and enforce rate limits. This is also similar to the eBay example, but with different limits:

    {
      "domain": "enroute",
      "descriptors": [
        {
          "key": "x-app-key",
          "value": "x-app-notfound",
          "descriptors": [
            {
              "key": "remote_address",
              "rate_limit": {
                "unit": "hour",
                "requests_per_unit": 10
              }
            }
          ]
        },
        {
          "key": "x-app-key",
          "descriptors": [
            {
              "key": "remote_address",
              "rate_limit": {
                "unit": "hour",
                "requests_per_unit": 1000
              }
            }
          ]
        }
      ]
    }

Conclusion

We have shown why rate-limiting is critical for APIs today and how rate limits can be programmed on the Enroute Universal Gateway. Depending on where the API is running, either the standalone gateway or the Kubernetes Ingress API gateway can be used.

Advanced rate-limiting can be run without any restrictions or licenses on Enroute Universal API Gateway. Rate-limiting is fundamental to running APIs and is provided completely free in the community edition (while other vendors charge for it).

Enroute provides a complete rate-limiting solution for APIs, with centralized control over all aspects of rate-limiting. With Enroute’s flexibility, the need for API rate-limiting can be met in any environment: private cloud, public cloud, Kubernetes ingress, or gateway mesh.

You can use the contact form to reach us if you have any feedback.