Zero trust and genesis of service mesh

Introduction

CNCF surveys show strong momentum for service mesh: the 2020 survey indicated that 27% of respondents use a service mesh in production, while the 2021 survey puts that number at 36%.

For networking and security use cases in a cloud-native environment, a service mesh is a key consideration, in addition to the choice of Container Network Interface (CNI) and Ingress Controller.

According to a research report by 360 Research, the global service mesh market is set to grow at a CAGR of over 41% and reach 1.4B by 2027. Securing workloads and zero trust are top concerns for CIOs and CISOs, and they are fuelling this growth.

What this article covers

Service meshes have been around for a while, so what makes them more interesting now? We’ll go over how the service mesh evolved into what it is today.

Major security incidents of 2021, such as the fallout from SolarWinds and Log4Shell, have put cybersecurity at the center of every IT initiative. We discuss what zero trust is and why it is critical in today’s distributed infrastructure that spans multiple clouds.

Microservice architectures need zero trust because they have a larger attack surface. Service meshes are a key technology for enforcing zero trust principles in a microservices environment.

Cryptographically verifiable, ephemeral, cloud-native workload identities are critical for building trust and enforcing authentication and authorization. We look at the SPIFFE standard (and the SPIRE runtime that implements it), which provides these identities.

EnRoute OneStep is built on Envoy Proxy, which is also a popular choice to run as a sidecar proxy. While there have been concerns about the complexity of Envoy-based service meshes, we discuss some initiatives that are addressing them.

EnRoute integrates with both the lightweight Linkerd service mesh and Istio to achieve end-to-end encryption. The last section provides links to integration details.

Distributed Software and RPC

Many features seen in service meshes today were originally part of RPC frameworks. Consider a few popular RPC frameworks (ranked by GitHub stars): Apache Dubbo, Twitter Finagle, and gRPC.

They all share features that resemble what a service mesh does today:

| Feature | Apache Dubbo | Twitter Finagle | gRPC |
|---|---|---|---|
| Load balancing | LB, LB-ext | Aperture load balancer | Per-call LB |
| Limits / timeouts / deadlines | Timeout | Finagle servers with limits | Deadlines |
| Observability / monitoring | Build an extension to monitor | Observability support | Interceptors to monitor |

These features are useful when building distributed systems. However, the libraries that provide them have to be maintained and integrated into every piece of distributed software.

A language- and library-agnostic mechanism that provides these features independently of the application lifecycle would add convenience while still delivering the necessary functionality.
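To make that coupling concrete, here is a minimal sketch of the kind of logic an RPC library embeds in every application: round-robin load balancing, a per-call deadline, and a simple call counter for observability. The `RpcClient` class and its pluggable `transport` callable are hypothetical and do not mirror the Dubbo, Finagle, or gRPC APIs.

```python
from __future__ import annotations

import itertools
import time
from typing import Callable, Sequence

# Hypothetical transport signature: (endpoint, request, timeout_s) -> response
Transport = Callable[[str, str, float], str]


class RpcClient:
    """Illustrative in-process client: round-robin LB, per-call deadline, call counters."""

    def __init__(self, endpoints: Sequence[str], transport: Transport) -> None:
        self._next_endpoint = itertools.cycle(endpoints)  # round-robin over backends
        self._transport = transport
        self.calls: dict[str, int] = {}  # per-endpoint call counts (minimal observability)

    def call(self, request: str, deadline_s: float) -> str:
        endpoint = next(self._next_endpoint)
        self.calls[endpoint] = self.calls.get(endpoint, 0) + 1
        start = time.monotonic()
        response = self._transport(endpoint, request, deadline_s)
        # Fail the call if the transport returned after the deadline had passed.
        if time.monotonic() - start > deadline_s:
            raise TimeoutError(f"deadline of {deadline_s}s exceeded calling {endpoint}")
        return response
```

Every service has to link, configure, and upgrade code like this in its own language; moving the same behavior into a proxy takes it out of the application’s release cycle.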

The genesis of Service Mesh

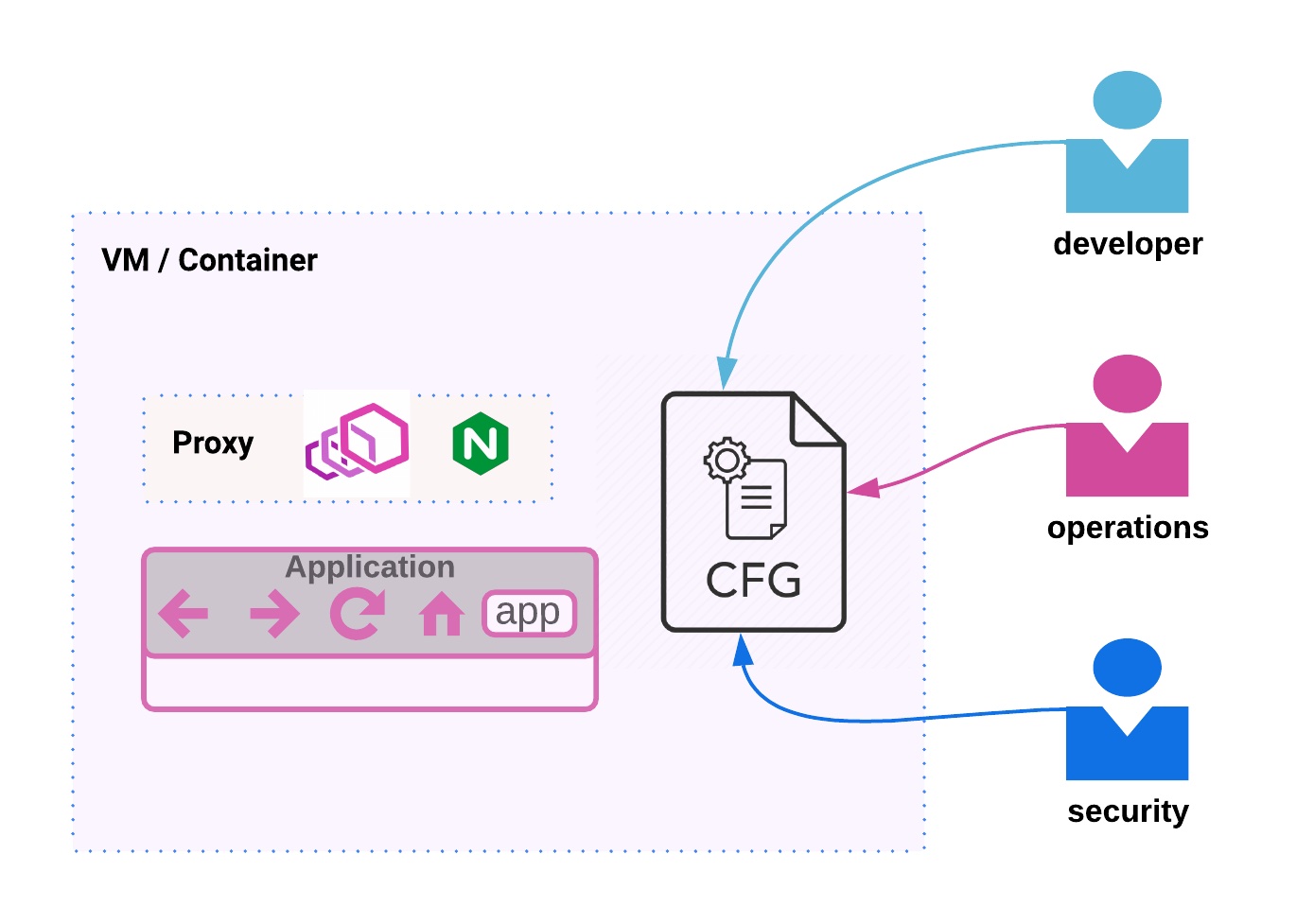

Before the service mesh became a pattern, practitioners (developers and software architects) realized that packaging a proxy with their application helped them meet these distributed-systems needs without the overhead of managing libraries. It was common for developers to package a proxy such as nginx or Envoy, along with a configuration file, to front the application they had written. A developer who built a service would:

- Package a new version of the software (say, for a VM or a container)

- Write a file that encodes information about the application (e.g., the ports it opens)

- Have the proxy read the configuration file to front the application

- Have the proxy post updates or metrics to a control plane
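A minimal sketch of that workflow, assuming a hypothetical developer-written descriptor that holds only a service name and port: the function turns it into a static Envoy v3 bootstrap in which a listener forwards TCP traffic to the local application. The descriptor schema, ports, and names are illustrative; a real deployment would add HTTP routing, TLS, and telemetry.

```python
import json


def envoy_sidecar_config(app: dict, listen_port: int) -> dict:
    """Turn a developer-written app descriptor into a minimal Envoy v3 static bootstrap.

    `app` is a hypothetical descriptor, e.g. {"name": "orders", "port": 8080}.
    """
    cluster = f"local_{app['name']}"
    return {
        "admin": {"address": {"socket_address": {"address": "127.0.0.1", "port_value": 9901}}},
        "static_resources": {
            # Inbound listener: accept traffic on listen_port and hand it to the TCP proxy filter.
            "listeners": [{
                "name": f"{app['name']}_inbound",
                "address": {"socket_address": {"address": "0.0.0.0", "port_value": listen_port}},
                "filter_chains": [{"filters": [{
                    "name": "envoy.filters.network.tcp_proxy",
                    "typed_config": {
                        "@type": "type.googleapis.com/envoy.extensions.filters.network.tcp_proxy.v3.TcpProxy",
                        "stat_prefix": app["name"],
                        "cluster": cluster,
                    },
                }]}],
            }],
            # Upstream cluster: the application itself, listening on its local port.
            "clusters": [{
                "name": cluster,
                "connect_timeout": "1s",
                "type": "STATIC",
                "load_assignment": {
                    "cluster_name": cluster,
                    "endpoints": [{"lb_endpoints": [{"endpoint": {"address": {
                        "socket_address": {"address": "127.0.0.1", "port_value": app["port"]},
                    }}}]}],
                },
            }],
        },
    }


if __name__ == "__main__":
    descriptor = {"name": "orders", "port": 8080}  # what the developer would write
    print(json.dumps(envoy_sidecar_config(descriptor, listen_port=10000), indent=2))
```

Saved to a file such as `envoy.json`, output like this can be passed to Envoy with `envoy -c envoy.json`.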

Part of the configuration was driven by the developer and was specific to their application. Other parts were driven by operations or security teams to build observability and other aspects into the system.
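One way to picture this split is as two configuration layers merged into the proxy’s final configuration. The sketch below assumes hypothetical keys and a simple precedence rule (operations/security settings override developer settings on conflict); real systems often use finer-grained ownership than this.

```python
from __future__ import annotations

from typing import Any


def merge_layers(developer: dict[str, Any], platform: dict[str, Any]) -> dict[str, Any]:
    """Recursively merge two config layers; platform (ops/security) values win on conflict.

    Neither input is mutated: nested dicts are copied as they are merged.
    """
    merged = dict(developer)
    for key, value in platform.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_layers(merged[key], value)  # merge nested sections key by key
        else:
            merged[key] = value  # platform-owned value overrides the developer's
    return merged


# Hypothetical layers: the developer sets ports and timeouts; security mandates mTLS.
developer_layer = {"port": 8080, "timeouts": {"request_ms": 5000}, "tls": {"mode": "disabled"}}
platform_layer = {"tls": {"mode": "mutual"}, "metrics": {"enabled": True}}
```

Merging `developer_layer` with `platform_layer` keeps the developer’s port and timeout but forces `tls.mode` to `"mutual"` and turns metrics on.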

Managing these proxies required in-house code, which effectively formed a control plane for them.

Service Mesh

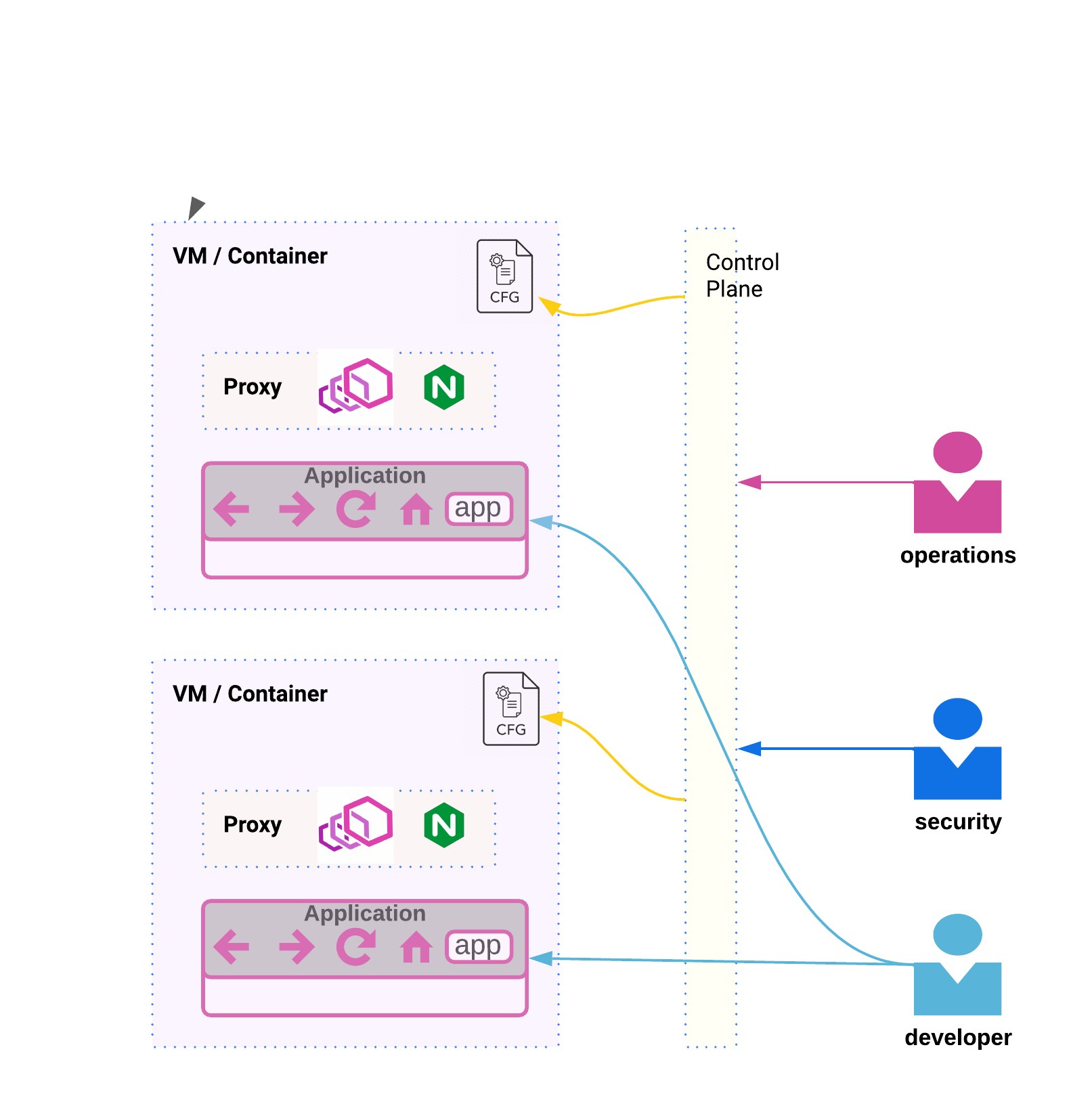

A service mesh is a collection of proxies that run alongside services, with a control plane that centrally manages the configuration, lifecycle, and operational aspects of those proxies. Teams no longer have to build custom control planes to configure and run their proxies. A service mesh also simplifies upgrades, which are much easier when a library doesn’t have to be compiled into the application.
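To illustrate the control plane’s role, here is a toy in-memory sketch: the control plane stores a versioned configuration per service, and each proxy pulls a newer version when one exists. The `ControlPlane` and `Proxy` classes are hypothetical; production meshes typically stream updates to proxies (for example over Envoy’s xDS APIs) rather than relying on a simple poll like this.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ControlPlane:
    """Toy control plane: one config per service, with a version bumped on every change."""
    configs: dict[str, dict] = field(default_factory=dict)
    versions: dict[str, int] = field(default_factory=dict)

    def set_config(self, service: str, config: dict) -> int:
        self.configs[service] = config
        self.versions[service] = self.versions.get(service, 0) + 1
        return self.versions[service]

    def poll(self, service: str, known_version: int) -> tuple[int, dict] | None:
        """Return (version, config) if newer than the proxy's version, else None."""
        current = self.versions.get(service, 0)
        if current > known_version:
            return current, self.configs[service]
        return None


@dataclass
class Proxy:
    """Toy sidecar that keeps the latest config the control plane gave it."""
    service: str
    version: int = 0
    config: dict = field(default_factory=dict)

    def sync(self, cp: ControlPlane) -> bool:
        update = cp.poll(self.service, self.version)
        if update is None:
            return False  # already up to date
        self.version, self.config = update
        return True
```

A single `set_config` call reaches every proxy for that service on its next sync, which is the centralized management teams previously had to build themselves.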

Teams now have a platform, in the form of a set of proxies, that they can configure for many features. The rich functionality of a service mesh makes it valuable to several teams:

- Observability is valuable to both the operations team and the developer, especially when the developer owns the service end to end, from development through deployment to operations

- Security / SecOps teams can enforce key security requirements through the service mesh (more on this below)

- Platform Operations teams may work with the service mesh to define centralized policies that may need to be enforced across the organization

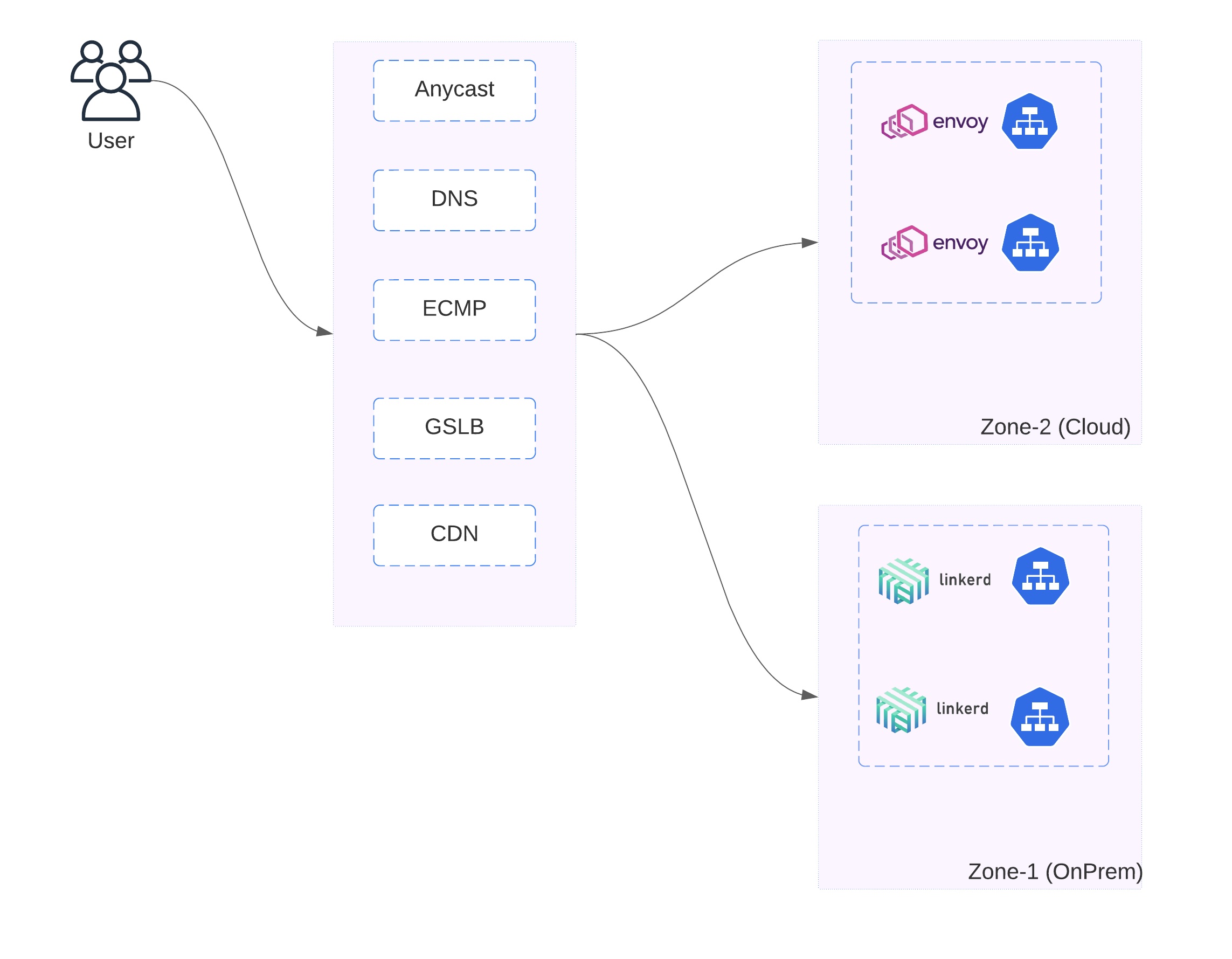

- In case of multi-cloud and hybrid-cloud scenarios, the cloud operations teams may work with multiple cloud(s) and may have to work with different kinds of service meshes.

A solution architecture with multi-cloud and multiple service meshes may look like -

Need for Zero Trust

Last year began with organizations still recovering from one of the biggest attacks in recent years, SolarWinds, whose high-profile victims included Microsoft, the Department of Homeland Security (DHS), and several others.

The year ended with Log4Shell, a vulnerability in the widely used Log4j library that impacted thousands of packages.

The move to microservices and distributed architectures increases the attack surface, and with it the risk. Problems that were once solved for a single monolith now have to be solved for every microservice. With a larger attack surface, the castle-and-moat approach is no longer an effective strategy for securing a distributed microservices environment.

The idea of de-perimeterization was published in 2007 and later evolved into Zero Trust, as described in NIST Special Publication 800-207.

Zero Trust assumes no implicit trust and requires every request to be verified; its defining principle is “never trust, always verify”.

Implementing Zero Trust

To build trust between services and users, there must be a way to authenticate and authorize both. This requires an inventory of services and users, an identity provider, and a policy enforcement point that verifies these identities. Designing least-privilege access policies additionally requires a way to define and manage those policies centrally.
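The three ingredients above (an inventory of identities, verification of each identity, and a centrally defined least-privilege policy) can be sketched as a default-deny check that runs on every request. Everything here, including the `Request` fields, the sample identities, and the allowlist, is hypothetical; in a mesh, authentication would typically come from mTLS and the policy from the control plane.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    caller_id: str       # identity the caller presented (hypothetical SPIFFE-style ID)
    authenticated: bool  # did the caller prove that identity (e.g. via mTLS)?
    service: str         # service being called
    action: str          # operation requested, e.g. an HTTP method


# Hypothetical inventory of known workload identities.
INVENTORY = {
    "spiffe://example.org/ns/shop/sa/frontend",
    "spiffe://example.org/ns/shop/sa/orders",
}

# Hypothetical least-privilege allowlist of (caller, service, action) triples.
POLICY = {
    ("spiffe://example.org/ns/shop/sa/frontend", "orders", "GET"),
}


def authorize(req: Request) -> bool:
    """Verify every request; anything not explicitly allowed is denied."""
    if not req.authenticated:
        return False  # never trust an unproven identity
    if req.caller_id not in INVENTORY:
        return False  # identity is not in the inventory
    return (req.caller_id, req.service, req.action) in POLICY
```

Because the policy is an allowlist, adding a new service grants it nothing until someone explicitly adds a rule for it.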

Zero Trust in microservices with Service Mesh

Zero trust principles can be applied to network security and microservices using a service mesh. The first level of control is traffic entering the cluster. EnRoute OneStep is a feature-rich Ingress Controller and API Gateway that, among other features, can help enforce zero trust principles at the ingress.

For traffic inside the cluster, a service mesh can simplify security by providing workload identities, authenticating those identities for inter-service communication, and authorizing requests. The same mesh proxies that provide operational capabilities can, combined with a framework for establishing trust, provide the infrastructure needed to implement zero trust.

Mutual TLS (mTLS) enforces per-workload identity using TLS: each side of a connection presents a certificate and verifies the other’s. Beyond providing the identities needed for authentication and authorization, mTLS also encrypts traffic between services. It forms the basis of identity-based segmentation, tightening segmentation boundaries from L3 network domains up to L7+ identity domains.
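A minimal sketch of the two halves of an mTLS connection using Python’s standard `ssl` module: both contexts require a verifiable peer certificate, and each side loads a CA bundle plus its own certificate and key. The file paths passed to the hypothetical helper `load_workload_credentials` are placeholders; in a mesh, the sidecar proxy usually does this on the application’s behalf, using short-lived certificates issued by the mesh or an identity provider such as SPIRE.

```python
import ssl


def mtls_context(server: bool) -> ssl.SSLContext:
    """Create a TLS context in which the peer must present a certificate we can verify."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER if server else ssl.PROTOCOL_TLS_CLIENT)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if not server:
        # Workload certificates identify services by a URI SAN (e.g. a SPIFFE ID) rather than
        # a DNS hostname, so the caller must check the peer's identity after the handshake.
        ctx.check_hostname = False
    # Require a certificate from the peer; on the server side this is what makes TLS "mutual".
    ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx


def load_workload_credentials(ctx: ssl.SSLContext, ca_file: str, cert_file: str, key_file: str) -> None:
    """Trust the mesh CA bundle and present this workload's own certificate and key."""
    ctx.load_verify_locations(cafile=ca_file)
    ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
```

After a successful handshake, `getpeercert()` on the wrapped socket returns the verified peer certificate, from which the peer’s workload identity can be read and checked against policy.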

Ephemeral Workload Identity - SPIFFE

Authentication and authorization are key requirements of a Zero Trust architecture, and a workload identity is necessary to achieve access control and mutual TLS (mTLS). SPIFFE is a set of standards that defines a workload identity (the SPIFFE ID), encodes it in a cryptographically verifiable document such as the X.509-SVID, and specifies a mechanism (the Workload API) for workloads to retrieve their identities.
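A SPIFFE ID is a URI of the form `spiffe://<trust-domain>/<path>`, and an X.509-SVID carries it as the certificate’s single URI SAN. The sketch below pulls that ID out of the dictionary that Python’s `ssl.SSLSocket.getpeercert()` returns after a verified handshake, and applies a simplified subset of the SPIFFE ID rules (no length limit check, for instance); production code should rely on a maintained SPIFFE library instead. The trust domain and path used below are made up.

```python
import re
from typing import Tuple

# Simplified subset of the SPIFFE ID character rules.
_TRUST_DOMAIN = re.compile(r"[a-z0-9._-]+")
_PATH_SEGMENT = re.compile(r"[A-Za-z0-9._-]+")


def parse_spiffe_id(uri: str) -> Tuple[str, str]:
    """Split spiffe://<trust-domain>/<path> into (trust_domain, path); raise ValueError if malformed."""
    scheme = "spiffe://"
    if not uri.startswith(scheme):
        raise ValueError(f"not a SPIFFE ID: {uri!r}")
    trust_domain, slash, path = uri[len(scheme):].partition("/")
    if not _TRUST_DOMAIN.fullmatch(trust_domain):
        raise ValueError(f"invalid trust domain: {trust_domain!r}")
    if not slash:
        return trust_domain, ""  # a bare trust-domain ID has no path
    for segment in path.split("/"):
        # Reject empty segments ("//" or a trailing "/"), dot segments, and other characters.
        if segment in (".", "..") or not _PATH_SEGMENT.fullmatch(segment):
            raise ValueError(f"invalid path segment: {segment!r}")
    return trust_domain, "/" + path


def spiffe_id_from_peercert(cert: dict) -> str:
    """Extract the SPIFFE ID from a dict returned by ssl.SSLSocket.getpeercert()."""
    uris = [value for kind, value in cert.get("subjectAltName", ()) if kind == "URI"]
    if len(uris) != 1:
        raise ValueError("an X.509-SVID must contain exactly one URI SAN")
    parse_spiffe_id(uris[0])  # raises if the URI is not a well-formed SPIFFE ID
    return uris[0]
```

The returned ID, rather than an IP address or hostname, is what an authorization policy would then match on.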

Reference architectures

Google’s BeyondCorp provides a reference architecture to adopt and implement Zero Trust.

The DoD’s Enterprise DevSecOps initiative lays out a reference architecture for building a secure Zero Trust platform (also used for defense applications).

Service Mesh Complexity and Simplifying Service Mesh

Envoy has emerged as an extremely popular choice of service proxy. Its deep observability, coupled with its extensibility, makes it ideal for microservices traffic management, for both North-South and East-West traffic.

EnRoute OneStep Kubernetes Ingress API Gateway is also built on the powerful and elegant Envoy Proxy.

While Envoy is a popular choice as a mesh proxy, there have been efforts to simplify this solution further. Broadly speaking, these initiatives fall into two areas:

Running fewer Envoys for the mesh

One concern that has been voiced on multiple occasions (1,2) is the memory footprint of Envoy proxies running in a mesh. There are recommendations for controlling and tuning sidecar Envoy proxy deployments.

One particular effort is tighter integration, using eBPF, between Envoy and a CNI that is aware of service identities. Running eBPF logic per node makes it possible to run one Envoy proxy per node while retaining the ability to demultiplex traffic per pod. This is particularly useful because it still allows per-pod, per-service policies while running only one Envoy proxy per node.
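Conceptually, a per-node proxy must recover which pod a connection came from before it can apply that pod’s policy. The toy model below stands in for that demultiplexing step with a lookup from pod IP to workload identity; it is not eBPF code, and the `NodeProxy` class, IPs, and identities are hypothetical. In the design described above, the identity-aware CNI and eBPF supply this mapping.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class NodeProxy:
    """Toy model: one proxy per node that still enforces per-pod, per-service policy."""
    pod_identity: dict[str, str] = field(default_factory=dict)  # pod IP -> workload identity
    allowed: set[tuple[str, str]] = field(default_factory=set)  # (source identity, destination service)

    def register_pod(self, pod_ip: str, identity: str) -> None:
        self.pod_identity[pod_ip] = identity

    def allow(self, source_identity: str, dest_service: str) -> None:
        self.allowed.add((source_identity, dest_service))

    def admit(self, src_pod_ip: str, dest_service: str) -> bool:
        # "Demux" step: map the connection back to the pod (and identity) it came from.
        identity = self.pod_identity.get(src_pod_ip)
        if identity is None:
            return False  # unknown source pod: deny by default
        return (identity, dest_service) in self.allowed
```

A single instance serves every pod on the node, yet two pods on that node can receive different decisions for the same destination.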

gRPC with Envoy xDS

A KubeCon talk by Megan Yahya of Google introduced configuring gRPC using xDS, the control plane API that Envoy Proxy uses for configuration. With gRPC configured through xDS, parts of an Envoy-based mesh can move into gRPC itself, which eliminates the complexity of injecting a proxy and managing its lifecycle. For example, there are gRPC proposals corresponding to Envoy Proxy features such as outlier detection, RBAC, circuit breaking, HTTP filters, and several others.
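Proxyless gRPC clients locate their xDS control plane through a bootstrap file named by the `GRPC_XDS_BOOTSTRAP` environment variable. The sketch below writes a minimal bootstrap using only the standard library; the control-plane address and node ID are hypothetical placeholders, and insecure channel credentials are used only to keep the example short.

```python
import json
import os
import tempfile


def write_xds_bootstrap(server_uri: str, node_id: str) -> str:
    """Write a minimal gRPC xDS bootstrap file and point GRPC_XDS_BOOTSTRAP at it."""
    bootstrap = {
        "xds_servers": [{
            "server_uri": server_uri,                 # address of the xDS control plane
            "channel_creds": [{"type": "insecure"}],  # demo only; use real credentials in production
            "server_features": ["xds_v3"],
        }],
        "node": {"id": node_id},                      # how this client identifies itself to the control plane
    }
    fd, path = tempfile.mkstemp(suffix=".json")
    with os.fdopen(fd, "w") as f:
        json.dump(bootstrap, f)
    os.environ["GRPC_XDS_BOOTSTRAP"] = path
    return path


# Usage sketch, assuming a grpcio release with xDS support is installed:
#   write_xds_bootstrap("xds-control-plane.example:18000", "orders-client-1")
#   channel = grpc.insecure_channel("xds:///orders.example:50051")
```

With the bootstrap in place, the `xds:///` target tells gRPC to fetch endpoints and policy from the control plane, the same source a sidecar Envoy would use.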